Professionally, I've been working on Outlook Copilot, building experiences that leverage LLMs in the email flow. I've also been learning more about the technology itself and peeling back the layers to understand it better.

One thing I was curious about was how to finetune an LLM for a narrow scenario. The toy scenario I picked was a model that corrects a sentence for grammar, spelling, punctuation, capitalization, etc.

Output: Leaves rustled softly in the autumn breeze.

I will go over all the steps: data generation, fine tuning, and then running the resulting model with LLaMA.cpp on my Mac.

Before we go into the details, here are the validation results.

| Model | ROUGE-2 | BLEU (4-gram) |
|---|---|---|
| 3B Fine Tuned - Q4_K | 0.911872 | 0.890461 |
| 3B Fine Tuned - Q8_0 | 0.904628 | 0.879871 |
| Dolphin 2.0 mistral 7B - Q8_0 | 0.872627 | 0.804831 |
| 3B Fine Tuned - Q2_K | 0.814925 | 0.7469 |
| StableLM Zephyr 3b - Q8_0 (base) | 0.648531 | 0.159785 |

The steps involved were:

- Creating a dataset, which will be used for training and validation
- Deciding which metrics to use for evaluation
- Creating a baseline with existing models
- Fine tuning using LoRA
- Serving the model using LLaMA.cpp after GGUF conversion

Dataset creation

Once you have the requirements for the problem you are trying to solve, and have evaluated that an LLM is the right approach, you need a dataset to finetune on. If you already have a clean, high-quality dataset, then awesome, but I'm assuming that's not the case.

In my scenario, I was able to generate a synthetic dataset. The beauty of having more powerful LLMs is that you can use them to generate data to train smaller language models. I went through the following process.

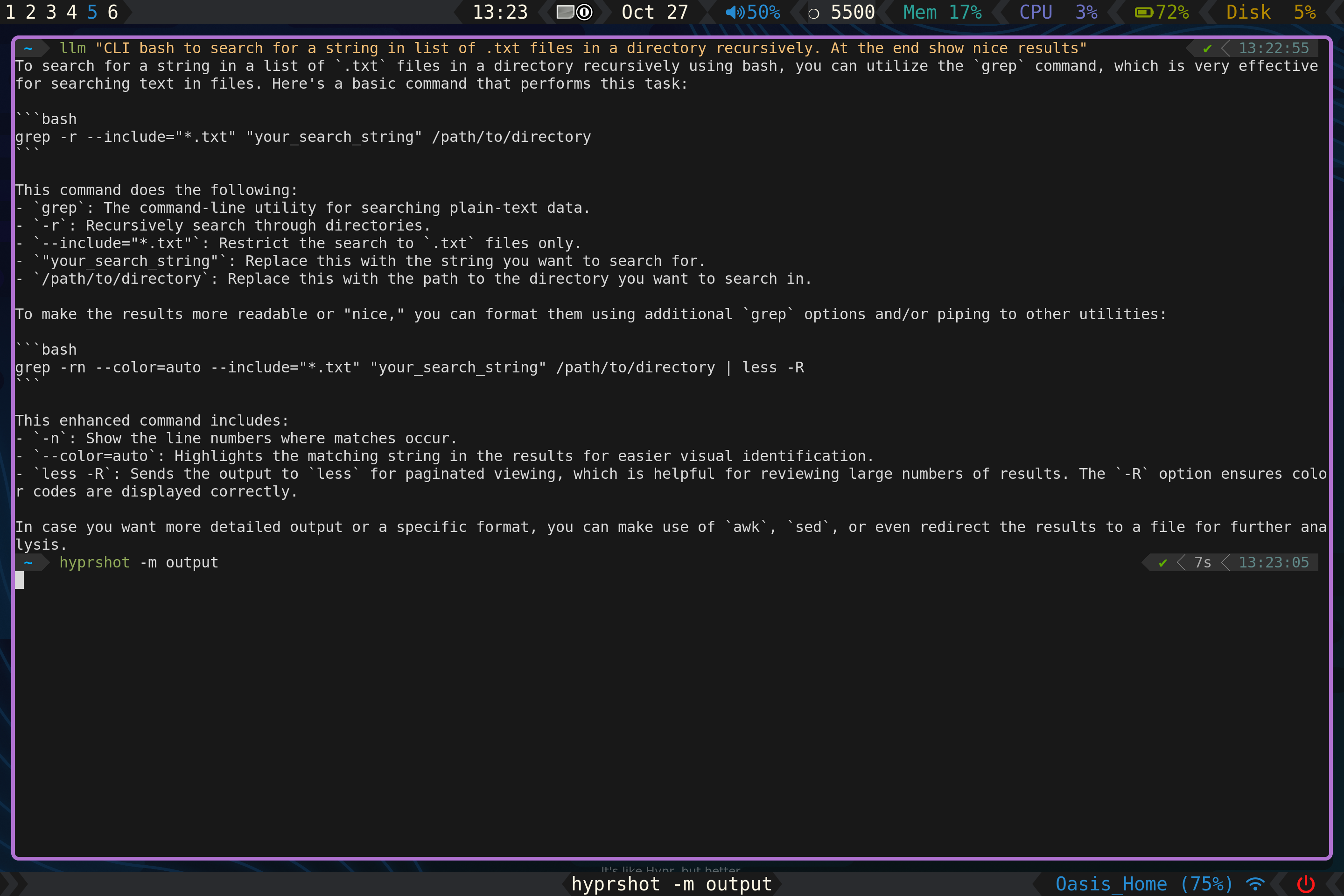

First, I created a prompt in a playground with the more powerful LLM of my choice and tried it out to see whether it generated both incorrect and correct sentences the way I expected.

Once I had that, the next step was to make the output parsable, so I leveraged the ability of these powerful models to output JSON (or XML). Using this, I generated approx. 100 samples. This was done in a zero-shot way to create my bootstrapping dataset, which is then used to generate more similar samples. You should go over these bootstrapped samples thoroughly to check the quality of the data.

From what I've read and learned about the finetuning process, the quality of the dataset is one of the most important aspects, so don't skimp on it.
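Part of that quality check can be automated before reading the samples by hand. Below is a minimal sketch, assuming the model replies with the `DataArray` / `Correct` / `FastTyped` JSON shape used in the prompt shown later in this post. The function name `parse_samples` and the specific filters (empty strings, unchanged pairs, duplicates) are my own illustration, not the script I originally used.

```python
import json


def parse_samples(raw_response: str) -> list[dict]:
    """Parse one JSON reply and keep only usable (FastTyped, Correct) pairs."""
    payload = json.loads(raw_response)
    samples, seen = [], set()
    for item in payload.get("DataArray", []):
        if not isinstance(item, dict):
            continue
        correct = str(item.get("Correct", "")).strip()
        fast_typed = str(item.get("FastTyped", "")).strip()
        # Drop empty entries and pairs where nothing was actually changed.
        if not correct or not fast_typed or correct == fast_typed:
            continue
        # Drop repeats of a sentence we already kept.
        if correct in seen:
            continue
        seen.add(correct)
        samples.append({"Correct": correct, "FastTyped": fast_typed})
    return samples


# Made-up reply for illustration only.
raw = json.dumps({
    "DataArray": [
        {"Correct": "I have 2 cats.", "FastTyped": "i hav 2 cats"},
        {"Correct": "Nothing changed.", "FastTyped": "Nothing changed."},
        {"Correct": "I have 2 cats.", "FastTyped": "I hve 2 cats."},
    ]
})
print(parse_samples(raw))
```

Filters like these only catch mechanical problems; they don't replace reading through the samples yourself.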

Once I had the initial bootstrapping dataset, I wrote a Python script to generate more such samples using few-shot prompting.

Few-shot prompting: in the prompt used to generate data, I also include a few examples of the data so the model can generate more similar samples.

The following is the prompt I used to generate the bootstrapping dataset; I later updated it to contain examples.

Generate unique sentences of varied length between small and long length. Some of them should also contain multiple. For each of those now write them in a way where a person who is not good at typing and types very quickly with partial and incorrect words will write but still being close to the intended sentences.

# Guidelines to follow:

* Create {TOTAL_LENGTH} such examples.

* Don't prefix them with number.

* Include examples from various domains such as science, math, literature, social media, slang etc.

* Create a diverse set of sentences, some containing all the way from only one error to all the way to errors across the sentence.

* Each of them should have numbers in it but keep the number same.

* Add various variety of errors e.g. typos, homophones, grammatical mistakes, omissions, capitalizations, and transpositions to accurately reflect real-world mistakes.

Always returns response in JSON the following format. The **array should have {TOTAL_LENGTH} items**.

```json

{
  "DataArray": [
    {
      "Correct": "The correct string",
      "FastTyped": "The fast typed string"
    },
    {
      "Correct": "The correct string",
      "FastTyped": "The fast typed string"
    }
  ]
}

```

When I asked for the response as a top-level array like the following, the model did not comply:

[{ incorrect, correct }]

But when I changed it to return the following, it complied:

{ data: [{ incorrect, correct }] }

Using this approach, I was able to create a dataset with 2000+ samples.
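The few-shot step can be sketched roughly as follows: pick a handful of bootstrapped samples at random and append them to the generation prompt. This is an illustration under assumptions, not my original script. `BASE_PROMPT` is a shortened stand-in for the real prompt, the `# Examples` header is invented, and the sample sentences other than the "Leaves rustled" one are made up.

```python
import json
import random

# Shortened stand-in for the real generation prompt (hypothetical).
BASE_PROMPT = (
    "Generate unique sentences of varied length.\n"
    "* Create {TOTAL_LENGTH} such examples.\n"
    'Always return the response in JSON: {"DataArray": [...]}'
)


def build_few_shot_prompt(base_prompt, bootstrap, k, total, seed):
    """Fill in the sample count and append k random bootstrapped examples."""
    rng = random.Random(seed)
    examples = rng.sample(bootstrap, k=min(k, len(bootstrap)))
    example_block = json.dumps({"DataArray": examples}, indent=2)
    # str.replace instead of str.format: the prompt itself contains JSON braces.
    prompt = base_prompt.replace("{TOTAL_LENGTH}", str(total))
    return f"{prompt}\n\n# Examples of the kind of data to generate:\n{example_block}"


bootstrap = [
    {"Correct": "Leaves rustled softly in the autumn breeze.",
     "FastTyped": "leavs rustld softy in the autum breze"},
    {"Correct": "The train leaves at 9 tomorrow.",
     "FastTyped": "teh train leavs at 9 tommorow"},
    {"Correct": "She scored 95 on the math test.",
     "FastTyped": "she scord 95 on teh math tset"},
]

prompt = build_few_shot_prompt(BASE_PROMPT, bootstrap, k=2, total=20, seed=7)
print(prompt)
```

Sampling different examples on each call (by varying `seed`) is one way to nudge the generator toward more varied output.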

I then generated further synthetic data to add random capitalization issues, partial sentences, etc. This was done so I don't pigeonhole my data into only complete sentences with only grammatical issues, i.e. it adds diversity to the dataset so the model can work across a wider set of scenarios.
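Here is a minimal sketch of what such augmentation could look like, assuming the variants are derived from the correct sentence and flagged with the `LowerCase`, `RandomCase` and `PartialSentence` columns used in the split further down. The helper names and the exact rules (flip probability, where to cut a sentence) are assumptions for illustration.

```python
import random


def random_case(text, rng, flip_prob=0.3):
    """Flip the case of each character with probability flip_prob."""
    return "".join(ch.swapcase() if rng.random() < flip_prob else ch for ch in text)


def partial_sentence(text, rng, min_words=2):
    """Keep only a random-length prefix of the sentence (at least min_words words)."""
    words = text.split()
    if len(words) <= min_words:
        return text
    cut = rng.randint(min_words, len(words) - 1)
    return " ".join(words[:cut])


def make_variants(correct, rng):
    """Build one augmented row per augmentation type for a correct sentence."""
    flags = {"LowerCase": False, "RandomCase": False, "PartialSentence": False}
    partial = partial_sentence(correct, rng)
    return [
        {**flags, "FastTyped": correct.lower(), "Correct": correct, "LowerCase": True},
        {**flags, "FastTyped": random_case(correct, rng), "Correct": correct, "RandomCase": True},
        # For partial sentences the target is the truncated text, not the full one.
        {**flags, "FastTyped": partial.lower(), "Correct": partial, "PartialSentence": True},
    ]


rng = random.Random(0)
sentence = "Leaves rustled softly in the autumn breeze."
variants = make_variants(sentence, rng)
for row in variants:
    print(row)
```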

Lastly, you can put all of this in a pandas DataFrame, split it into training, validation and test sets, and save them so you can use them in the training process.
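To make the mechanics of the split concrete, here is a dependency-free sketch in plain Python rather than pandas. The 70/15/15 fractions, the function name `split_dataset` and the optional `group_key` argument (which splits each kind of sample separately so every set gets its share) are assumptions for illustration.

```python
import random
from collections import defaultdict


def split_dataset(rows, fractions=(0.7, 0.15, 0.15), seed=42, group_key=lambda row: None):
    """Shuffle rows and split them into (train, validation, test) per group."""
    rng = random.Random(seed)
    groups = defaultdict(list)
    for row in rows:
        groups[group_key(row)].append(row)

    train, validation, test = [], [], []
    for key in sorted(groups, key=repr):  # fixed order keeps the split reproducible
        members = groups[key][:]
        rng.shuffle(members)
        n_train = round(len(members) * fractions[0])
        n_val = round(len(members) * fractions[1])
        train += members[:n_train]
        validation += members[n_train:n_train + n_val]
        test += members[n_train + n_val:]  # whatever is left over
    return train, validation, test


# 60 plain rows and 40 augmented rows, purely synthetic.
rows = [{"id": i, "RandomCase": i >= 60} for i in range(100)]
train, validation, test = split_dataset(rows, group_key=lambda r: r["RandomCase"])
print(len(train), len(validation), len(test))
```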

If you created further synthetic data, as I did with capitalization and partial sentences, then make sure that each of the train, validation and test sets contains a consistent proportion of such data, e.g.

# Split the dataframe into train, test and validation sets with equal fraction of rows according to 'PartialSentence', 'LowerCase' and 'RandomCase' columns
train_df = df.groupby(
    list(df.columns.difference(['FastTyped', 'Correct']))
).apply(lambda x: x.sample(frac=0.7, random_state=seed))

Selecting metrics and baseline

You need to form a baseline so that you can empirically measure whether your finetuned model is actually doing better or has become worse.

Hugging Face has a good inventory of metrics along with a guide to choosing one. I liked the Hugging Face evaluate library, which is a good one-stop shop for many of the metrics. The metrics I decided to evaluate were the following:

- BLEU: It evaluates the quality of the machine-generated text against the ground truth (our target correct sentence) using n-gram overlap.
- ROUGE: ROUGE-L measures the longest common subsequence between the generated text and the ground truth, while ROUGE-N uses an n-gram overlap approach.
- Exact Match: This checks whether the generated text is exactly the same as the target text.

BLEU and ROUGE are more flexible, as they are not binary scores: they measure how closely the output matches the target rather than only whether it matches. I added exact match to the mix to see how often the model gets the sentence exactly right.
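To build some intuition for the difference between an overlap score and a binary one, here is a simplified illustration in plain Python. It is not the implementation used by the evaluate library: it tokenizes on whitespace only and computes a bigram-overlap F1 in the spirit of ROUGE-2.

```python
from collections import Counter


def ngrams(tokens, n):
    """Count the n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def rouge_n(prediction, reference, n=2):
    """F1 over n-gram overlap between prediction and reference (simplified)."""
    pred = ngrams(prediction.split(), n)
    ref = ngrams(reference.split(), n)
    overlap = sum((pred & ref).values())  # clipped count of shared n-grams
    if overlap == 0:
        return 0.0
    precision = overlap / sum(pred.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


def exact_match(prediction, reference):
    """1.0 only when the two strings are identical after trimming whitespace."""
    return float(prediction.strip() == reference.strip())


reference = "Leaves rustled softly in the autumn breeze."
prediction = "Leaves rustled softly in the autum breeze."  # one typo left in

print(round(rouge_n(prediction, reference), 4))  # 4 of 6 bigrams match -> 0.6667
print(exact_match(prediction, reference))        # 0.0
```

A single leftover typo drops exact match to zero, while the overlap score still gives credit for the parts that are right.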

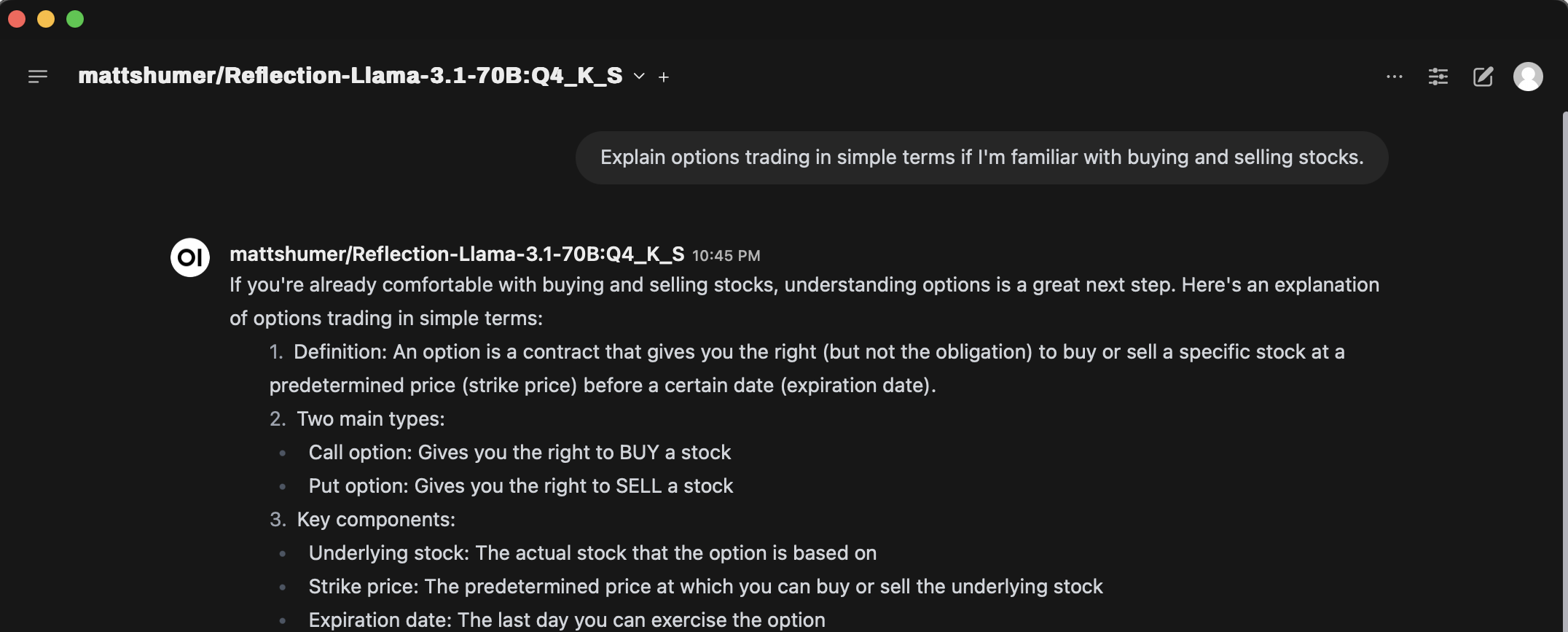

Creating a baseline with existing models

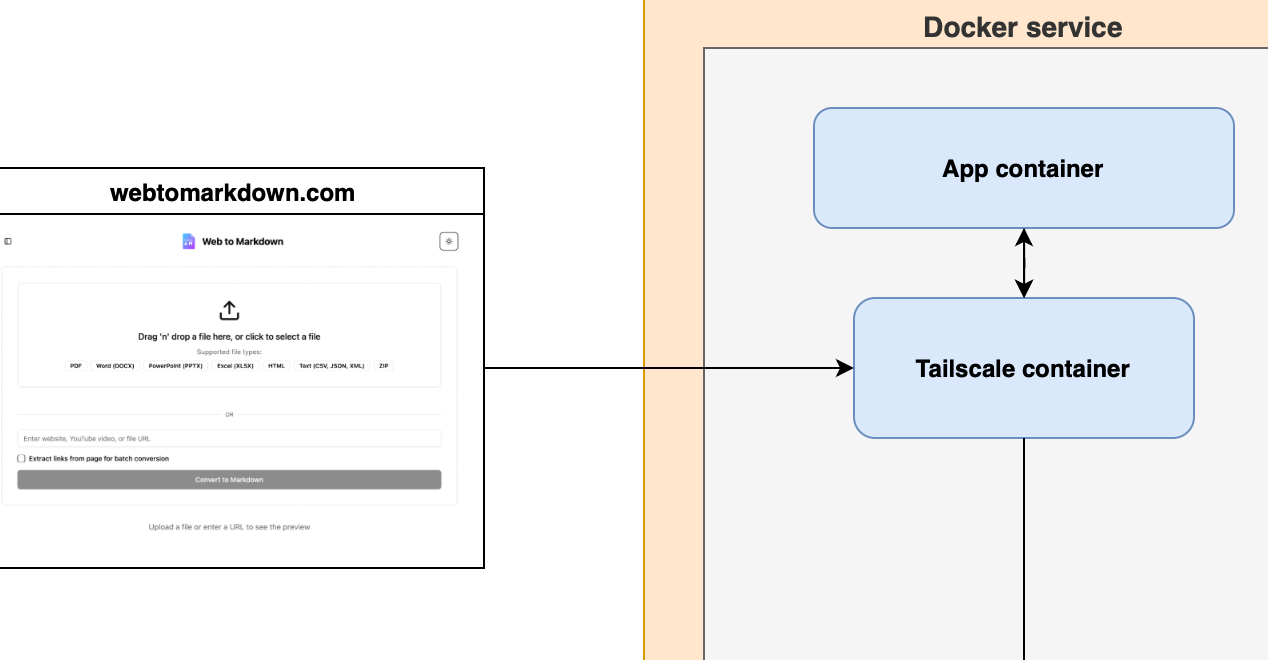

Once you have figured these out, the next step is to create a baseline with existing models. I chose Dolphin 2.0 Mistral 7B (Q8_0) and StableLM Zephyr 3B (Q8_0). To run the evaluation, I downloaded the GGUF files and ran them using the LLaMA.cpp server, which supports the OpenAI API format. Then I wrote my evaluation script in Python and pointed the openai.OpenAI client at the localhost URL served by LLaMA.cpp.

./server -m ~/.cache/huggingface/hub/models--TheBloke--dolphin-2.0-mistral-7B-GGUF/snapshots/3b345ee148d25b2da209c6166e855dc4845fcb4e/dolphin-2.0-mistral-7b.Q8_0.gguf -ngl 999

The script skeleton looked like the following:

import evaluate
import openai

# SYSTEM, SEED and get_prompt are defined elsewhere in the script.
client = openai.OpenAI(
    base_url="http://localhost:8080/v1",  # "http://<Your api-server IP>:port"
    api_key="sk-no-key-required",
)

def process_row(row, model_type):
    # Send one fast-typed sentence to the local server and return the model's reply.
    completion = client.chat.completions.create(
        model="gpt-3.5-turbo",
        messages=[
            {"role": "system", "content": SYSTEM},
            {"role": "user", "content": get_prompt(row['FastTyped'], model_type)},
        ],
        temperature=0,
        seed=SEED,
    )
    return completion.choices[0].message.content

def evaluate_model(...):
    ...
    rouge = evaluate.load('rouge')
    rouge_score = rouge.compute(predictions=predictions, references=references)
    ...

Store these results for your test and validation data.
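One simple way to keep those results around is a CSV file with one row per model, split and metric. The sketch below is only an illustration: the file name, column names and helper functions are assumptions, and the example scores are the validation numbers from the table at the top of this post.

```python
import csv
import os
import tempfile

FIELDS = ["model", "split", "metric", "value"]


def append_scores(path, model, split, scores):
    """Append one row per metric so runs for several models share one file."""
    write_header = not os.path.exists(path) or os.path.getsize(path) == 0
    with open(path, "a", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=FIELDS)
        if write_header:
            writer.writeheader()
        for metric, value in scores.items():
            writer.writerow({"model": model, "split": split, "metric": metric, "value": value})


def load_scores(path):
    """Read all stored rows back as a list of dicts (values are strings)."""
    with open(path, newline="") as f:
        return list(csv.DictReader(f))


# A temporary directory keeps the example from touching real files.
results_path = os.path.join(tempfile.mkdtemp(), "baseline_scores.csv")
append_scores(results_path, "dolphin-2.0-mistral-7b.Q8_0", "validation",
              {"rouge2": 0.872627, "bleu": 0.804831})
append_scores(results_path, "stablelm-zephyr-3b.Q8_0", "validation",
              {"rouge2": 0.648531, "bleu": 0.159785})

rows = load_scores(results_path)
for row in rows:
    print(row)
```

Keeping the baseline in the same file as later finetuned runs makes the before/after comparison a simple filter on the `model` column.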

Fine tuning using LoRA

Low-Rank Adaptation, aka LoRA, is a technique for finetuning LLMs in a parameter-efficient way. It doesn't involve finetuning the whole base model, which can be huge and cost a lot of time and money. Instead, LoRA adds a small number of trainable parameters to the model while keeping the original model parameters frozen.
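To make "a small number of trainable parameters" concrete: for a frozen weight matrix W of shape (d_out, d_in), LoRA trains two small matrices B (d_out × r) and A (r × d_in), and the effective weight is W + (alpha / r) · B·A. The sketch below uses plain Python lists to show the parameter count and the merge. The 2560 × 2560 layer size and rank 8 are hypothetical numbers chosen for illustration, not values read from the model or from lit-gpt.

```python
def lora_param_count(d_out, d_in, rank):
    """Trainable parameters LoRA adds for one weight matrix: B plus A."""
    return rank * (d_in + d_out)


def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def merge_lora(W, A, B, alpha, rank):
    """Return W + (alpha / rank) * B @ A, the weight used after merging."""
    scale = alpha / rank
    delta = matmul(B, A)
    return [[w + scale * d for w, d in zip(w_row, d_row)]
            for w_row, d_row in zip(W, delta)]


# Hypothetical layer: 2560 x 2560 with rank 8.
full = 2560 * 2560
lora = lora_param_count(2560, 2560, rank=8)
print(full, lora, f"{lora / full:.3%}")  # 6553600 40960 0.625%

# Tiny worked example with rank 1.
W = [[1.0, 0.0], [0.0, 1.0]]   # frozen base weight
B = [[1.0], [2.0]]             # d_out x r
A = [[0.5, 0.0]]               # r x d_in
merged = merge_lora(W, A, B, alpha=1, rank=1)
print(merged)  # [[1.5, 0.0], [1.0, 1.0]]
```

This merge is also, conceptually, what happens later when the LoRA weights are combined with the base model before conversion.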

You don't have to write the code from scratch; there are already tools available that will help you kickstart the whole thing. The one I used was lit-gpt from Lightning AI. There are other alternatives that you can also try, e.g. Axolotl. I'll use lit-gpt for this tutorial.

Clone or fork lit-gpt, as you will be copying and adding some scripts to fit your needs.

Prepare dataset for using in finetuning

Copy scripts/prepare_alpaca.py and rename it to something relevant to your project. In that script I updated the generate_prompt function to use the instruction template that Zephyr3B uses. By default, it uses the Alpaca-style instruction template, which looks like

Below is an instruction that describes a task, paired with an input that provides further context.
Write a response that appropriately completes the request.

### Instruction:
{example['instruction']}

### Input:
{example['input']}

### Response:

While Zephyr3B has the following instruction template.

<|system|>
{example['instruction']}<|endoftext|>
<|user|>
{example['input']}<|endoftext|>
<|assistant|>

It's important to use the right instruction template; otherwise the model may not generate responses as expected. You can generally find the instruction template a model supports in its Hugging Face model card, at least for the well-documented ones. If you are using a more esoteric model that doesn't have that info, check whether it is a finetune of a more prominent model that documents it, and use that.

I also made some changes to the prepare function in that file to change the destination and checkpoint paths, and removed some things I didn't need, e.g. the train/validation split, since I had already done my own split earlier and just reused it.

You can see what I used in scripts/prepare_corrections_ds.py.
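For reference, the Zephyr-style template above can be written as a generate_prompt function along these lines. This is a sketch that follows the template exactly as shown in this post. It assumes each example is a dict with `instruction` and `input` keys, as in the Alpaca-style data, and it is not a copy of the script in the repository.

```python
def generate_prompt(example: dict) -> str:
    """Format one example using the Zephyr-style instruction template."""
    return (
        f"<|system|>\n{example['instruction']}<|endoftext|>\n"
        f"<|user|>\n{example['input']}<|endoftext|>\n"
        "<|assistant|>"
    )


example = {"instruction": "Fix the text.", "input": "whts gng on"}
print(generate_prompt(example))
```

The same function can be reused at inference time so the prompt the model sees matches what it was trained on.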

Finetuning script

Copy finetune/lora.py and rename it to something relevant to your project. Here I also changed the directories for checkpoints, output, and where my data is. I also added a Weights & Biases logger (if you haven't used it, I would recommend checking it out), as it helps me keep tabs on how things are going.

The main change is in the validate function: I pick a random sample from my validation data and use it to check the loss as the model gets trained. This way I was able to see how it's progressing.

Start the finetune

Once I had all of this set up, all I needed was an environment with GPUs to use for finetuning. I opted for paperspace.com. Once you have the prepared data and the scripts downloaded, you can run them as follows.

First, download the model and convert it into the format that lit-gpt works with:

python scripts/download.py --repo_id stabilityai/stablelm-zephyr-3b --from_safetensors=True

python scripts/convert_hf_checkpoint.py --checkpoint_dir checkpoints/stabilityai/stablelm-zephyr-3b/

Prepare the dataset using your script:

python scripts/prepare_alpaca_copy.py

And finally, start the finetuning. If you added wandb, make sure you have set it up using the CLI and added your credentials.

python finetune/lora_copy.py

Continue to monitor the training; once it's complete, you should be good to start using the model.

Using your finetuned model

I like working with LLaMA.cpp as it works on multiple platforms, is pretty performant and comes with a lot of customizability. To run a model on LLaMA.cpp, you have to convert it to the GGUF format.

The finetuning run stores the LoRA weights separately, so first you need to merge them with the base model to get one model that contains both the base model and your finetune on top of it. lit-gpt already comes with a script to do that.

python scripts/merge_lora.py \
  --checkpoint_dir "checkpoints/stabilityai/stablelm-zephyr-3b" \
  --lora_path "/notebooks/corrections-slm/lora/corrections/lit_model_lora_finetuned.pth" \
  --out_dir "/notebooks/corrections-slm/lora/corrections/merged"

This takes the base model (checkpoint_dir), combines it with your finetune (lora_path) and writes the merged model to out_dir.

Then you need to convert this merged model to a Hugging Face model:

python scripts/convert_lit_checkpoint.py \
  --checkpoint_path "/notebooks/corrections-slm/lora/corrections_run_2/merged/lit_model.pth" \
  --output_path "/notebooks/corrections-slm/lora/corrections_run_2/merged/model.bin" \
  --config_path "/notebooks/corrections-slm/lora/corrections_run_2/merged/lit_config.json"

Here it takes your merged model (checkpoint_path), converts it to a Hugging Face model (output_path) and uses the config to set certain parameters (config_path).

P.S.: I haven't explored much of what the config currently contains and what it all means.

Finally, LLaMA.cpp has a convert-hf-to-gguf.py script that you can use to convert the Hugging Face model from the previous step to GGUF.

pip install -r requirements-hf-to-gguf.txt

python convert-hf-to-gguf.py /notebooks/corrections-slm/lora/corrections_run_2/merged/

Now you should have a GGUF model that you can run with LLaMA.cpp:

main --model /notebooks/corrections-slm/lora/corrections_run_2/merged/ggml-model-f16.gguf -p "<|system|>\nFix the text.<|endoftext|>\n<|user|>whts gng on<|endoftext|>\n<|assistant|>"

I am still learning how to better prepare datasets, train models and evaluate them. So please take this as the beginner's path I took; I'm sure there are much better ways to go about this, and I'll continue my journey to learn them :).